Anyway, it ended quite nicely. The premise was simple and was mostly resolved by the end of the series. Another series done well by david production; too bad they aren’t doing anything (at least not as the main production team) this coming season. Oh well, hopefully we’ll get more interesting series from them sometime in the future.

Author Archives: nanaya

Shakugan no Shana

The first series I mostly downloaded by myself, on a weekly basis, checking the tracker on release day back when there was no Google Reader or TokyoTosho (or perhaps there were, but I didn’t use them at the time).

Through it I got to know Kugimin and learned the term “tsundere”. Also light novels. Also Itou Noizi. Also system administration (guess how that’s related).

The first season was a blast. The second season was pretty much content-less, and the OVA series didn’t add anything meaningful. Despite them, the third and final season certainly didn’t disappoint. Lots of things I liked in the first season are back, except Shana’s tsundere-ness, since she’s much more honest than ever. Not that I hate it.

Also, great job, Eclipse (point-blank, anyone?). May the time come when I can buy the entire series on Blu-ray (or DVD?), hopefully in R2.

Moebooru rebased

Since I forgot to branch off the original source, the branch history looked awesomely crappy. Therefore I decided to rebase the entire thing to make it easier to keep track of the Moebooru “mainline”. All my commits are now in the “default” branch. If you didn’t make any changes, back out to revision 9174b6b5b02d and then pull again. And after that, don’t ever touch the moe branch again.

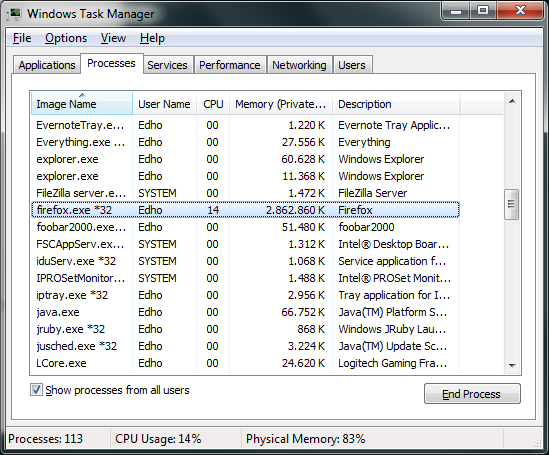

Firefox Memory Usage

moebooru again

Last week I posted about my random project to modernize moebooru without doing a complete rewrite (see this for yet another complete-rewrite attempt).

Let’s revisit the plan:

- Upgrade to Ruby 1.9: done, needs testing.

- Update all plugins: mostly done, can use some trimming.

- Update anything deprecated: nope

- Migrate to Bundler: done, not sure how to test.

- Use RMagick instead of custom ruby-gd plugin: nope

- Use RMagick instead of calling jhead binary: nope

- And more!: I hope you didn’t expect me to do more while there are incomplete items above.

Looks good so far. It needs more testing, though. There’s also one part where I had no idea why it needed to change when upgrading to 1.9. Just grep for FIXME to find it, and hopefully fix it for me (or explain what it does).

As usual, with today’s work done, the live demo is up and open for anyone to break (…if there’s anyone, that is).

[ Live Demo | Repository ]

bcrypt in Debian

WARNING: using the method below will lock you out of the emergency console, since whatever crypt it uses surely doesn’t understand bcrypt (as I experienced myself). Additionally, this solution won’t add bcrypt support to other applications using the crypt interface, such as proftpd, unless they are started with libxcrypt.so preloaded (also from my own experience).

As much as Drepper wants to pretend bcrypt is the wrong solution, it actually offers one benefit: easier migration to Linux. Some systems use bcrypt by default or can be configured to use it. In other cases, there may be times when you need the system’s crypt (or applications using it) to handle bcrypt passwords from an external system (usually web applications).

It’s quite difficult to enable bcrypt support in RHEL-based distros, as no libxcrypt or pam_unix2 packages are available. Thankfully, it’s available in Debian (and derivatives) in the libpam-unix2 package.

The README.Debian says to modify the files in /etc/pam.d, but if I remember correctly, that confused Debian’s PAM configuration handling or whatever. A few weeks later, I discovered a better way: creating a PAM configuration profile in /usr/share/pam-configs. Since pam_unix2 is mostly equivalent to the normal pam_unix, I just copied the existing profile and modified it using this (long-ass) sed one-liner:

sed -e 's/pam_unix.so/pam_unix2.so/g;s/^Name: Unix authentication$/Name: Unix2 authentication/;s/pam_unix2.so obscure sha512/pam_unix2.so obscure blowfish rounds=8/;s/ nullok_secure//' /usr/share/pam-configs/unix > /usr/share/pam-configs/unix2

Then run pam-auth-update, select Unix2 authentication and deselect Unix authentication. Don’t forget to update the passwords of all other users as well, or they won’t be able to log in, since pam_unix2 doesn’t recognize SHA-based hashes.

Actually, change all other users’ passwords to MD5 hashes first, before replacing pam_unix with pam_unix2.
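To see why pam_unix2 trips over existing accounts, it helps to look at how crypt-style hashes announce their algorithm: the ID between the first two $ signs ($1$ is MD5, $2a$ is bcrypt, $5$ and $6$ are SHA-256 and SHA-512). Below is a minimal Python sketch (not part of pam_unix2 or libxcrypt) that classifies a shadow-file hash by that prefix, so you can spot accounts still on SHA hashes before switching. The set of formats treated as safe for pam_unix2 is an assumption based on this post (MD5 and bcrypt work, SHA doesn’t), and the sample hashes are made-up placeholders.

```python
# Modular crypt format IDs for the common algorithms only.
CRYPT_IDS = {
    "1": "md5",
    "2a": "bcrypt",
    "5": "sha256",
    "6": "sha512",
}

# ASSUMPTION (from this post): pam_unix2 handles MD5 and bcrypt, not SHA.
UNIX2_OK = {"md5", "bcrypt"}


def classify_hash(shadow_hash: str) -> dict:
    """Return the algorithm (and bcrypt cost, if any) encoded in a crypt hash."""
    # Empty, "*" or "!"-prefixed fields mean no usable password (locked).
    if not shadow_hash or shadow_hash[0] in "*!":
        return {"algorithm": "locked", "cost": None}
    # No "$" prefix: classic 13-character DES crypt.
    if not shadow_hash.startswith("$"):
        return {"algorithm": "des", "cost": None}
    # "$2a$08$salthash" splits into ["", "2a", "08", "salthash"].
    parts = shadow_hash.split("$")
    algorithm = CRYPT_IDS.get(parts[1], "unknown")
    cost = None
    if algorithm == "bcrypt" and len(parts) > 2:
        # For bcrypt, the field after the ID is the log2 work factor.
        cost = int(parts[2])
    return {"algorithm": algorithm, "cost": cost}


def needs_rehash_for_unix2(shadow_hash: str) -> bool:
    """True if the account should get a new password before switching."""
    algorithm = classify_hash(shadow_hash)["algorithm"]
    return algorithm != "locked" and algorithm not in UNIX2_OK
```

For example, classify_hash("$2a$08$abcdefghijklmnopqrstuv") reports bcrypt with a cost of 8 (2^8 rounds), while any $6$ hash makes needs_rehash_for_unix2 return True.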

Update 2012-04-01: Removed nullok_secure since it isn’t supported.

Update 2012-06-09: Added warning.

FreeBSD is Rolling Release (the ports)

Don’t be fooled by the “release” system. Apart from the base system, FreeBSD qualifies perfectly as a rolling release. I guess that’s also why its binary package management sucks so badly: the ports UPDATING file won’t tell you how to upgrade affected packages using the binary method.

Here’s an example:

20120225:
  AFFECTS: users of archivers/libarchive
  AUTHOR: glewis@FreeBSD.org
  libarchive has been updated to version 3.0.3, with a shared library bump.
  This requires dependent ports to be rebuilt.
    # portmaster -r libarchive
  or
    # portupgrade -r archivers/libarchive

You would think the dependent packages would get a version bump to keep their dependencies correct, but they didn’t. Instead, you have to recompile everything that depends on it.
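Rebuilding “everything that depends on it” means finding every installed port that depends on libarchive directly or indirectly, which is what portmaster -r and portupgrade -r take care of. A minimal Python sketch of that reverse-dependency walk, assuming a hypothetical in-memory map from each port to its direct dependencies (the real tools read this from the installed package database). It only answers what to rebuild, not in which order, which a real tool also has to work out.

```python
from collections import deque


def ports_to_rebuild(deps: dict[str, set[str]], changed: str) -> list[str]:
    """Return every port that depends on `changed`, directly or transitively.

    `deps` maps each port origin to the set of origins it depends on.
    The result is sorted only to make the output deterministic.
    """
    # Invert the graph: for each port, which ports depend on it?
    reverse: dict[str, set[str]] = {}
    for port, requires in deps.items():
        for dep in requires:
            reverse.setdefault(dep, set()).add(port)

    # Breadth-first walk over the reverse edges, starting at the changed port.
    seen: set[str] = set()
    queue = deque([changed])
    while queue:
        current = queue.popleft()
        for dependent in reverse.get(current, ()):
            if dependent not in seen:
                seen.add(dependent)
                queue.append(dependent)
    return sorted(seen)
```

With a made-up graph where misc/tool-a needs archivers/libarchive and misc/app-b needs misc/tool-a, ports_to_rebuild(deps, "archivers/libarchive") returns ["misc/app-b", "misc/tool-a"]: the indirect dependent is caught too.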

And then there’s another case:

20120220:
  AFFECTS: users of graphics/libungif
  AUTHOR: dinoex@FreeBSD.org
  libungif is obsolete, please deinstall it and rebuild all ports using it
  with graphics/giflib.
    # portmaster -o graphics/giflib graphics/libungif
    # portmaster -r giflib
  or
    # portupgrade -o graphics/giflib graphics/libungif
    # portupgrade -rf giflib

Of course, Arch Linux kind of manages this, but it’s a purely binary rolling-release Linux distro. Its maintainers work hard to ensure this kind of thing is handled properly for all their users, who mostly use binary packages. FreeBSD, on the other hand, claims to be capable of both, but it really isn’t (unless I missed something).

I intend to contact the pkgng creator to ask his opinion about this, but have yet to do so…

Removing Annoying Speaker Static Noise

I’m not sure which sound cards exhibit this problem, but mine does (onboard Realtek HD on an Intel DH61BE motherboard running Windows 7 x64). It’s been annoying me since like forever, and tonight I finally decided to actually solve it.

As it turns out, the solution is quite simple: disable the PC Beep channel. A quick Google search showed this affects quite a few people, and apparently this is the cause (or at least related to it).

On a related note, apparently I’d done this before and then completely forgotten about it. That’s why I’m writing it down this time.

Manga Collection

An Attempt to Update moebooru Engine

In case you didn’t know, the moebooru currently running on oreno.imouto uses ancient versions of many things. It also uses a custom lighty module (mod_zipfile) which doesn’t seem to be available anywhere.

I’ve updated it to the latest Rails 2.x and made it compatible with nginx. Mostly. You can see it running here.

The plans:

- Upgrade to Ruby 1.9.

- Update all plugins.

- Update anything deprecated.

- Migrate to Bundler.

- Use RMagick instead of custom ruby-gd plugin.

- Use RMagick instead of calling jhead binary.

- And more!

We’ll see if I can actually finish this one. Grab the source here. Yeah, I’m using Mercurial for a Rails project.

nginx – gzip all the text

While migrating to another server, I recreated some of my configs and enabled gzip compression for most text file types. Here’s the relevant config:

gzip on;
gzip_vary on;
gzip_disable "msie6";
gzip_comp_level 6;
gzip_proxied expired no-cache no-store private auth;
gzip_types text/plain text/xml application/xml application/json application/x-javascript text/javascript text/css;

It should cover most text-based content one will ever serve over the web. Probably.
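As a rough model of what that gzip_types line does, here’s a Python sketch of the MIME-type check alone. It assumes the type is compared without parameters such as ; charset=utf-8, and it ignores the other conditions nginx applies (gzip_min_length, gzip_proxied, gzip_disable, and whether the client sent Accept-Encoding: gzip at all). One detail from the nginx documentation: text/html is always compressed once gzip is on, which is why it isn’t in the list.

```python
# MIME types listed in the gzip_types directive above.
GZIP_TYPES = {
    "text/plain", "text/xml", "application/xml", "application/json",
    "application/x-javascript", "text/javascript", "text/css",
}


def type_is_gzipped(content_type: str) -> bool:
    """Approximate the gzip_types check for a Content-Type header value."""
    # Drop parameters such as "; charset=utf-8" and normalise case.
    mime = content_type.split(";", 1)[0].strip().lower()
    # text/html is always compressed when gzip is on, listed or not.
    return mime == "text/html" or mime in GZIP_TYPES
```

Anything not listed, like image/png, is passed through uncompressed, which is fine for formats that are already compressed.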

Header Update

Apparently I forgot to restore the original header when migrating the site, so I decided to get a new one: an awesome (yuri) (ero)game CG from Hoshizora no Memoria.

To Heart 2 Dungeon Travelers OVA 1

On Comment System

I just migrated to Disqus, a fully managed comment system for websites. I first saw it used on Engadget. It didn’t work very well at the time (or at least I don’t have good memories of it), but it’s quite wonderful now.

One of the problems I have when leaving comments on other blogs is that it’s difficult to track which posts and sites I’ve commented on. Some sites (like this one used to) provide a “Notify via E-mail on Replies” option, but it’s clunky at best, and with dozens or hundreds of sites out there it’s nearly impossible to track them all. Not to mention you have to visit each blog to unsubscribe from its notifications.

Then along comes Disqus: a centralized comment system that any website can use, letting users manage all their comments across various websites from a one-stop interface. It uses JavaScript to embed the comment interface in a page. Not the best approach, but I guess it’s acceptable now with the emergence of smartphones and tablets that are actually capable of running JS.

Replacing WordPress’ comment system with Disqus is quite easy. The official WordPress plugin provides everything needed to migrate comments quickly and easily. It can even keep the comments synced with the local database, providing a quick way out in case Disqus goes evil™.

I doubt anyone still reads this blog (and blogs are so 2009), but well, here it is.

Server changes, etc

Apparently this blog is now blazing fast. I’m not sure why, but I can sure feel the difference.

I’ve made lots of changes to this site, so I’m not sure which one did the most:

- Not using WordPress Multisite

- Moved to 64-bit OS

- gzip-ed everything (er, most things)

- Used MySQL 5.5

Yeah, those are the changes. Also, this site has now moved through all four of my VPSes; the latest (this) one is at Hostigation. Hopefully they won’t have another network problem like the one that occurred sometime last month. I enabled collectd with the ping plugin, so I’ll have concrete data on what happened next time there’s a network problem.

…and as it turns out, I set the expiration overly aggressively, resulting in even the main page being cached. Epic fail on my part.

Google: 1 – Me: 0

All right, after trying to create a good mail server solution for myself (and only myself), I gave up and went back to Google Apps. Maintaining a mail server isn’t easy, and having to maintain one for just one person isn’t very motivating.

A Dark Rabbit Has Seven Lives OVA

Apparently I missed this one. Released back in December, it basically added nothing to the story. At least it has the above awesome yuri-ish scene (“-ish” because of certain circumstances).

Oh, and at the end of this episode there’s the awesome Fukuyama Jun (as this series’ Hinata) talking to himself (as LoLH’s Ryner).

Repositories

I’ve re-enabled syncing of the Danbooru repository to Bitbucket, as I’m planning to work on it again: mainly upgrading to Rails 3 and reducing the insanity required for installation. And perhaps importing the danbooru-imouto changes back.

As usual, the WordPress mirror is still going.

nginx/php single config for SSL and non-SSL connection

This morning I noticed I hadn’t upgraded the WordPress MU Domain Mapping plugin to the latest version, which supposedly brings better SSL support. After upgrading, I couldn’t log in to my mapped-domain blogs (e.g. this blog). What a great way to start the morning.

After some digging, I found out the problem was that one FastCGI parameter, HTTPS, wasn’t being passed to PHP. It should be set to a true value whenever the connection uses SSL; otherwise there’s no way for the PHP process to know whether the connection is secure. The previous version of WPMUDM had a bug that skipped the SSL check, which in turn let HTTPS work even without this parameter. Deciding it was my fault (I believe its absence would also completely break phpMyAdmin), I added the parameter.

But it’s not that simple: I’m using a single PHP include config for both my SSL and non-SSL servers. Splitting the config would make the duplication worse (it’s already relatively bad as it is), so that’s not an option. Using the evil if isn’t a solution either, since fastcgi_param can’t be set inside it.

Then the solution hit me: the map module, which is made specifically for things like this and for avoiding if. I tested it, and it indeed worked as expected.

Here be the config:

...
http {
    ...
    map $scheme $fastcgi_https {
        https 1;
        default 0;
    }
    ...
    server {
        ...
        location ~ \.php$ {
            ...
            fastcgi_param HTTPS $fastcgi_https;
        }
        ...

And WordPress MU Domain Mapping is now happy.

Update 2012-02-20: nginx 1.1.11 and later have a built-in $https variable, so you can pass it directly (fastcgi_param HTTPS $https;) and the map is no longer needed.
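On the PHP side, WordPress decides whether a request is secure by looking at that HTTPS parameter. The sketch below mirrors, in Python, what WordPress’s is_ssl() does as far as I know: HTTPS counts as secure when it is “on” (case-insensitive) or “1”, and SERVER_PORT 443 is only consulted when HTTPS isn’t set at all. The params dict stands in for the FastCGI parameters PHP exposes in $_SERVER; treat it as an approximation, not WordPress’s actual code. It also shows why both the map above (1 or 0) and nginx’s $https variable (“on” or an empty string) work.

```python
def is_ssl(params: dict[str, str]) -> bool:
    """Python approximation of WordPress's is_ssl() check."""
    if "HTTPS" in params:
        value = params["HTTPS"]
        # "on" (any case) or "1" means the request came in over SSL;
        # any other value, such as "0" or "", means it did not.
        return value.lower() == "on" or value == "1"
    # Only when HTTPS is absent does the server port decide.
    return params.get("SERVER_PORT") == "443"
```

Note the flip side: if HTTPS isn’t passed at all and SSL terminates on a port other than 443, this check returns False, which matches the login trouble described above.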

Ore no Kanojo to Osananajimi ga Shuraba Sugiru chapter 8

Hello there. This manga has a fucking long title. And the scanlator decided to skip providing a DDL after their previous attempts at using watermarks. Inb4 they reinvent SecuROM.

Ripped from batoto with the following command:

for i in {1..37}; do
    curl -O "$(curl http://www.batoto.net/read/_/76060/ore-no-kanojo-to-osananajimi-ga-shuraba-sugiru_ch8_by_japanzai/$i |
        grep 'img src="http://img.batoto.com/comics/' |
        sed -E 's/.*src="([^"]+)".*/\1/')"
done

Whoopsie, as it turns out, the files are actually GIFs. Brb, fixing them.

Here be the fix:

for i in *.png; do mv -- "$i" "${i%.*}.gif"; done
for i in *.gif; do convert "$i" -flatten "${i%.*}.png" && rm -- "$i"; done
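Renaming by hand works once you know what the files really are, but checking the first few bytes (the file signature) avoids guessing in the first place. A minimal Python sketch, assuming only the three formats that showed up here (PNG, GIF, JPEG) matter; the function names are my own and not from any tool. It only fixes extensions; converting the GIFs to PNG is still the convert step above.

```python
from pathlib import Path
from typing import Optional

# Leading bytes ("magic numbers") that identify each image format.
SIGNATURES = [
    (b"\x89PNG\r\n\x1a\n", ".png"),
    (b"GIF87a", ".gif"),
    (b"GIF89a", ".gif"),
    (b"\xff\xd8\xff", ".jpg"),
]


def sniff_extension(data: bytes) -> Optional[str]:
    """Return the extension matching the data's signature, or None."""
    for magic, ext in SIGNATURES:
        if data.startswith(magic):
            return ext
    return None


def fix_extensions(directory: Path) -> list[Path]:
    """Rename files whose extension doesn't match their actual contents."""
    renamed = []
    for path in sorted(directory.iterdir()):
        if not path.is_file():
            continue
        with path.open("rb") as f:
            ext = sniff_extension(f.read(8))
        if ext and path.suffix.lower() != ext:
            target = path.with_suffix(ext)
            if target.exists():
                continue  # don't clobber an existing file
            path.rename(target)
            renamed.append(target)
    return renamed
```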

Links updated to the fixed pack.

[ Fileserve | myconan.net ]

Hitsugi no Chaika chapter 2

I almost forgot about this series. DDL, etc. Like the previous chapter, this one was also scanlated by viscans.

Update: what, the title is obviously Hitsugi no Chaika.

[ Fileserve | myconan.net ]

This pile of crap called OpenLDAP

In an attempt to learn THE directory service called LDAP, I tried to set up OpenLDAP on Scientific Linux. The install went all right, and slapd could be started immediately without much problem. Except that the config is one big mystery, and there’s not even a rootpw defined by default. Being a complete newbie at this LDAP thingy, I decided to build the configuration and everything from scratch.

…except that it’s not actually trivial. Most examples/tutorials are for OpenLDAP versions prior to 2.4, which still use slapd.conf; it has since been deprecated in favor of configuration stored as LDAP entries in LDIF format. Instead of one nice config file, we have directories like cn=config inside slapd.d. Someone must’ve been into Linux too much (xxx.d: Linux users sure love “modularizing” their configs).

Anyway, the example in the slapd-config manual page doesn’t even work because its include syntax is wrong (it should be file:///etc/… instead of /etc/…), and even after fixing that, there’s still an error:

[root@charlotte openldap]# slapadd -F /etc/openldap/slapd.d -n 0 -l initman.ldif
str2entry: invalid value for attributeType olcSuffix #0 (syntax 1.3.6.1.4.1.1466.115.121.1.12)
slapadd: could not parse entry (line=626)
_#################### 100.00% eta none elapsed none fast!
Closing DB...
[root@charlotte openldap]# slaptest
slaptest: bad configuration file!

The example from the guide gives the exact same error.

In short, I kind of gave up and tried to follow the “Quick Start” from the very same guide. Instead of the slapd.d format, it still uses the slapd.conf format, despite being a guide for 2.4. Following the pattern, its config example also spat out an error:

[root@charlotte openldap]# vi slapd.conf
[root@charlotte openldap]# slaptest
/etc/openldap/slapd.conf: line 2: invalid DN 21 (Invalid syntax)
slaptest: bad configuration file!

So much for examples. A few more attempts at both methods later, I gave up and wrote this post.

Compiling PuTTY for Windows

Because of an awesome bug that inflicts eye cancer when using the Consolas font with “Bold text is a different colour” deactivated, I had to recompile PuTTY by hand (more like, by gcc). I initially tried to compile PuTTYTray, but apparently they successfully mixed C and C++ code and completely broke the mingw build procedure. Or I missed something obvious.

Anyway, I went back to vanilla PuTTY. As it turns out, compiling with the latest mingw gcc isn’t a good idea, since it removed the -mno-cygwin option, so the build breaks unless you do some magic edits. Because of that, I stopped bothering to compile it on Windows and used the Linux mingw gcc cross-compiler (which can produce Windows binaries). Here be the steps from the beginning. Tested on Debian 6.

apt-get install mingw32 subversion perl
svn co svn://svn.tartarus.org/sgt/putty putty
cd putty
perl mkfiles.pl
cd windows
make VER="-DSNAPSHOT=$(date '+%Y-%m-%d') -DSVN_REV='$(svnversion)' -DMODIFIED" TOOLPATH=i586-mingw32msvc- -f Makefile.cyg putty.exe

The patch is applied before make (duh), and the diff can be found here. If you’re lazy (like me), you can just download the build from my server (links below). It should be virus-free, but feel free to notify me if you encounter one. Built every day until it breaks.

- exe: the program

- sha512: hash of the program

- zip: both program and its checksum
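The sha512 file is there so you can check that the download wasn’t corrupted along the way. Here’s a minimal Python sketch of that check, assuming the checksum file starts with the hex digest (as sha512sum output does); the file names in the usage line are placeholders, not the actual names on the server.

```python
import hashlib
from pathlib import Path


def sha512_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hex SHA-512 digest of a file, read in chunks to keep memory flat."""
    digest = hashlib.sha512()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify(binary: Path, checksum_file: Path) -> bool:
    """Compare a file's SHA-512 with the first token of a checksum file."""
    expected = checksum_file.read_text().split()[0].lower()
    return sha512_of(binary) == expected
```

Usage would be something like verify(Path("putty.exe"), Path("putty.exe.sha512")), with the names adjusted to whatever you actually downloaded.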

Love, Election, and Chocolate gets anime adaptation

Koi to Senkyo to Chocolate, an eroge from sprite, has just been announced to get an anime adaptation.

From ANN:

The January issue of ASCII Media Works’ Dengeki G’s Magazine is announcing a 2012 television anime adaptation of sprite/fairys’ Love, Election, & Chocolate (Koi to Senkyo to Chocolate) adult game on Wednesday. The 2010 Windows game follows the protagonist at a “mega academy” with over 6,000 students. To save his cooking club from being abolished, the protagonist agrees to run for student body president.

The art was good, and as usual I have no idea what the story is about 😀

And again, as usual, I need to prepare for the art quality to degrade compared to the original. At least I’m not a big fan of Akinashi Yuu (Koichoco being the only eroge he/she has ever done art for), though it’ll still be sad to see it degrade.

No information on the animation studio or pretty much anything else.

Hitsugi no Chaika chapter 1

Here be the Fileserve link to Hitsugi no Chaika chapter 1, which Kurogane seems to recommend.

Here’s the bash command I used to leech from this batoto.com thingy. It was originally a one-liner but is reformatted here for readability:

for i in {1..66}; do
    curl -O "$(curl http://www.batoto.net/read/_/52737/hitsugime-no-chaika_ch1_by_village-idiot/$i |
        grep 'img src="http://img.batoto.com/comics/' |
        sed -E 's/.*src="([^"]+)".*/\1/')"
done

Yes, I parsed html using sed/regex 😀

Flame away.
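For the flamers: a slightly less fragile way to pull the image URL out of the page is an actual HTML parser. A minimal Python sketch using the standard library’s html.parser, assuming (like the sed version) that the page image is an img tag whose src starts with http://img.batoto.com/comics/; this one takes the first such tag, fetching the page is left out, and the sample HTML in the test is made up.

```python
from html.parser import HTMLParser
from typing import Optional

PREFIX = "http://img.batoto.com/comics/"


class ComicImageFinder(HTMLParser):
    """Records the src of the first <img> pointing at the comics host."""

    def __init__(self) -> None:
        super().__init__()
        self.src: Optional[str] = None

    def handle_starttag(self, tag, attrs):
        # Self-closing <img ... /> tags also end up here by default.
        if tag != "img" or self.src is not None:
            return
        # attrs is a list of (name, value) pairs; value may be None.
        src = dict(attrs).get("src")
        if src and src.startswith(PREFIX):
            self.src = src


def find_comic_image(html: str) -> Optional[str]:
    finder = ComicImageFinder()
    finder.feed(html)
    finder.close()
    return finder.src
```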

PS: Apparently I fail at leeching shit. I should’ve checked the file formats before deciding they’re JPG. Just extract the files and rename them with the correct extensions if you’re feeling diligent (or getting broken images with MMCE). Only pages 1-4 are JPG.

PS: whatever. Fixed everything. Added a DDL from my own site since I want to waste some bandwidth.

[ Fileserve | myconan.net ]

W3 Total Cache

Test post to see if this plugin actually works.

Here be the magical line:

try_files /wp-content/w3tc-$host/pgcache/$uri/_index.html $uri $uri/ /index.php?q=$uri&$args;

It doesn’t handle gzip, CSS, etc. yet.
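That try_files line makes nginx serve W3 Total Cache’s pre-rendered page straight from disk when it exists, and only falls through to PHP otherwise. A rough Python model of that lookup order, assuming hypothetical sets of existing files and directories (paths relative to the document root) in place of the real filesystem; it ignores everything else nginx does afterwards, such as index handling for $uri/.

```python
import re


def _squash(path: str) -> str:
    # "pgcache/$uri/" produces doubled slashes; on disk they resolve the same.
    return re.sub(r"/{2,}", "/", path)


def resolve_try_files(host: str, uri: str, args: str,
                      files: set[str], dirs: set[str]) -> str:
    """Model the W3TC try_files line: the first existing candidate wins."""
    cache_page = _squash(f"/wp-content/w3tc-{host}/pgcache/{uri}/_index.html")
    if cache_page in files:
        return cache_page  # cached HTML served directly, no PHP involved
    if uri in files:
        return uri  # $uri: an existing static file
    if uri in dirs:
        return uri + "/"  # $uri/: an existing directory
    # Last parameter: internal redirect to WordPress's front controller.
    return f"/index.php?q={uri}&{args}"
```

For example, with the cached copy of /about/ present for example.org, resolve_try_files("example.org", "/about/", "", files, dirs) returns "/wp-content/w3tc-example.org/pgcache/about/_index.html", while an uncached page falls through to index.php.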

Nanoha AMV

Oh hello there, certain group of people whom I’ve fed this video several times 😀

[youtube http://www.youtube.com/watch?v=upMXNs2ZcEs&w=640&h=390]

The Movie 2nd A’s where.

TIL WordPress supports HTML5 YouTube embedding.

WordPress Multisite with nginx (subdomain/wildcard domain ver.)

WordPress Multisite, previously known as WordPress MU (Multi User), lets you host multiple WordPress blogs from a single installation. Instead of creating a copy of WordPress for each user’s blog, one Multisite installation can serve multiple users, each with their own blogs. Personal/custom domains are also possible, as used for this blog (this blog’s master site is genshiken-itb.org). Unfortunately, the official documentation only provides a guide for installing on Apache. In case you didn’t know, I usually avoid Apache; I’m simply more proficient with nginx. And of course, this blog is running on nginx, so it’s perfectly possible to run WordPress Multisite on nginx.

At any rate, reading the official documentation is still a must; this post only covers the nginx equivalents of the Apache-specific parts (namely “Apache Virtual Hosts and Mod Rewrite” and “.htaccess and Mod Rewrite”), and only for a subdomain install. The subdirectory version may or may not follow some time later.

Assuming you have working nginx and php-cgi (with a process manager like php-fpm or supervisord), for starters you’ll want to create a dedicated config file for this WP install; let’s call it app-wordpress.conf. Obviously, the WordPress files need to live somewhere on your server; in this example, they’re in /srv/http/genshiken-itb.org/.php/wordpress. The config file’s content:

client_max_body_size 100m;
root /srv/http/genshiken-itb.org;

location /. { return 404; }

location / {
    root /srv/http/genshiken-itb.org/.php/wordpress;
    index index.php;
    try_files $uri $uri/ /index.php?$args;
    rewrite ^/files/(.*) /wp-includes/ms-files.php?file=$1;

    location ~ \.php$ {
        try_files $uri =404;
        fastcgi_pass unix:/tmp/php-genshiken.sock;
        fastcgi_read_timeout 600;
        fastcgi_send_timeout 600;
        fastcgi_index index.php;
        fastcgi_param SCRIPT_FILENAME $request_filename;
        include fastcgi_params;
    }
}

Simple enough. In fact, it’s exactly the same as a normal WordPress install except for one extra line:

rewrite ^/files/(.*) /wp-includes/ms-files.php?file=$1;

And you’re set. Note that I set the FastCGI timeouts higher than the default to work around the slow performance of an Amazon EC2 Micro Instance. This should only be needed during network upgrades and massive blog imports.
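That extra rewrite is what makes uploaded files under /files/ work on a subdomain install: the request is handed to wp-includes/ms-files.php with the rest of the path as the file argument. A minimal Python sketch of the same mapping with the identical regex, for illustration only. Per the nginx docs, when the replacement carries its own arguments, the original query string is appended after them, which the sketch mimics.

```python
import re

# Same pattern as the nginx rule: rewrite ^/files/(.*) ...
FILES_RE = re.compile(r"^/files/(.*)")


def rewrite_files(uri: str, args: str = "") -> str:
    """Map /files/<path> to ms-files.php the way the rewrite rule does."""
    match = FILES_RE.match(uri)
    if match is None:
        # Rule doesn't apply: the request passes through unchanged.
        return f"{uri}?{args}" if args else uri
    target = f"/wp-includes/ms-files.php?file={match.group(1)}"
    # nginx appends the original arguments after the new ones.
    return f"{target}&{args}" if args else target
```

For example, rewrite_files("/files/2011/05/pic.jpg") gives "/wp-includes/ms-files.php?file=2011/05/pic.jpg".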

Then, in your main nginx.conf file, put:

server {
    listen 80;
    server_name *.genshiken-itb.org;
    include app-wordpress.conf;
}

Put it in the proper place (inside the http block). The usefulness of a separate file for the WordPress configuration becomes apparent once you want to tweak performance for some blogs. I’ll explain that later if I feel like it.
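One thing worth knowing about server_name *.genshiken-itb.org: a leading wildcard matches any subdomain, including deeper ones like a.b.genshiken-itb.org, but not the bare domain itself (nginx’s special form .genshiken-itb.org covers both). A mapped custom domain won’t match it either. A tiny Python model of that rule, for illustration; it ignores exact and regex server names and nginx’s lookup order between them.

```python
def matches_leading_wildcard(pattern: str, host: str) -> bool:
    """Model nginx's "*.example.org" server_name match for a Host value."""
    if not pattern.startswith("*."):
        raise ValueError("only leading-wildcard patterns are modelled")
    suffix = pattern[1:].lower()  # e.g. ".genshiken-itb.org"
    host = host.lower()  # host names are case-insensitive
    # At least one label must come before the suffix.
    return host.endswith(suffix) and len(host) > len(suffix)
```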

Solaris 10 Patch Where?

If you haven’t noticed, Solaris 10 isn’t available for free anymore. At least the patches aren’t. It’s Oracle, after all.

Security patches used to be available for free back when Sun still existed, but not anymore. From the PCA site:

Unlike before, even security patches are not available for free anymore.

So you’re screwed if you don’t have a support contract. You’re better off installing OpenIndiana instead.

In case you’re one of the lucky folks (like me /hahahaha) with an office Oracle account that has a Solaris support contract, I suggest checking out PCA to make installing patches easier. Also, install it through OpenCSW for the easiest way to keep it updated.

User Management in Solaris 10

We’re back with Solaris 10 administration series. This time, it’s the user management part.

Securing the Password

For God-knows-what reason (probably legacy), the default password hashing algorithm in Solaris 10 is the classic UNIX DES crypt. To change it, edit /etc/security/policy.conf, find the line starting with CRYPT_DEFAULT, and change it to this:

CRYPT_DEFAULT=2a

(you can also set it to another value, but 2a, the Blowfish-based bcrypt algorithm, should be good enough)

And to change the root password, first edit /etc/shadow and prepend $2a$ to the 2nd (password) field, like this:

root:$2a$afgfdg....:...

Otherwise, changing the root password with passwd won’t use the newly configured algorithm.

Creating User

First of all, remember that usernames in Solaris are limited to 8 characters. Linux doesn’t have this limit, but on Solaris longer names will break ps (it displays the UID instead of the username). Also, creating directories directly in /home isn’t possible, for several reasons (it’s managed by the automounter). The proper way is to create the home directory somewhere else and add a corresponding entry to /etc/auto_home.

useradd -s /bin/bash newuser
mkdir -p /export/home/newuser
chown newuser:staff /export/home/newuser
printf "%s\t%s\n" "newuser" "localhost:/export/home/newuser" >> /etc/auto_home
passwd newuser

This lets Solaris automount the actual directory (in this case /export/home/newuser) on /home/newuser using a loopback filesystem (lofs) mount.

Of course, you can put the actual directory somewhere else entirely, though having a home outside /home feels weird.
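The two Solaris-specific gotchas above, the 8-character name limit and the tab-separated auto_home line, are easy to get wrong by hand. Here’s a small Python sketch that checks the name and builds the entry in the same key, tab, host:/path form the printf produces; the function name and defaults are hypothetical, and it doesn’t touch the system, it just returns the line.

```python
MAX_SOLARIS_USERNAME = 8  # longer names make ps show UIDs instead


def auto_home_entry(username: str, export_root: str = "/export/home",
                    server: str = "localhost") -> str:
    """Return an /etc/auto_home line mapping /home/<user> to its real dir."""
    if not username or len(username) > MAX_SOLARIS_USERNAME:
        raise ValueError(
            f"username must be 1-{MAX_SOLARIS_USERNAME} characters")
    # Key (the name under /home), a tab, then host:/path of the real directory.
    return f"{username}\t{server}:{export_root}/{username}\n"
```

For newuser, this yields the same line as the printf above, ready to append to /etc/auto_home.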

![[Commie] Inu × Boku SS - 12 [938E7B85].mkv_snapshot_21.00_[2012.04.01_19.32.49]](https://uploads.nanaya.net/wp-content/uploads/sites/2/2012/04/Commie-Inu-×-Boku-SS-12-938E7B85.mkv_snapshot_21.00_2012.04.01_19.32.49-1024x576.jpg)

![[SS-Eclipse] Shakugan no Shana Final - 24 (1280x720 Hi10P) [4B866A6E].mkv_snapshot_16.02_[2012.04.01_13.04.29]](https://uploads.nanaya.net/wp-content/uploads/sites/2/2012/04/SS-Eclipse-Shakugan-no-Shana-Final-24-1280x720-Hi10P-4B866A6E.mkv_snapshot_16.02_2012.04.01_13.04.29-1024x576.jpg)

![[SubSmith] ToHeart2 Dungeon Travelers OVA 01 - Total Disaster [1080p 10bit][76539452].mkv_snapshot_17.32_[2012.03.01_13.32.09]](https://uploads.nanaya.net/wp-content/uploads/sites/2/2012/03/SubSmith-ToHeart2-Dungeon-Travelers-OVA-01-Total-Disaster-1080p-10bit76539452.mkv_snapshot_17.32_2012.03.01_13.32.09-1024x576.jpg)

![[Derp]_Itsuka_Tenma_no_Kuro_Usagi_OVA_[DVD-480p-AAC][96C8A866].mkv_snapshot_17.09_[2012.02.12_21.18.37]](https://uploads.nanaya.net/wp-content/uploads/sites/2/2012/02/Derp_Itsuka_Tenma_no_Kuro_Usagi_OVA_DVD-480p-AAC96C8A866.mkv_snapshot_17.09_2012.02.12_21.18.37-500x281.jpg)